Note: after a summer “pause” mostly dedicated to a mid-term exhibition and the publication of our joint design-research, we are preparing to dive back into the I&IC project for 18 months. This will begin next November with a couple of new workshops with guest designers/partners (Dev Joshi from Random International at ECAL and Sascha Pohflepp at HEAD). We will take the opportunity to further test different approaches and ideas about “The cloud”. We will then move on to the next steps of our work, focused on the development of a few “counter-propositional” artifacts.

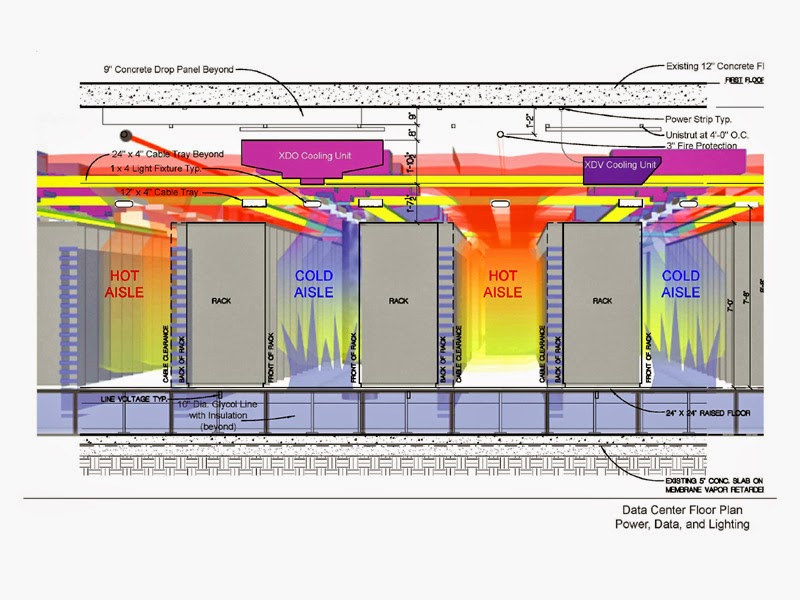

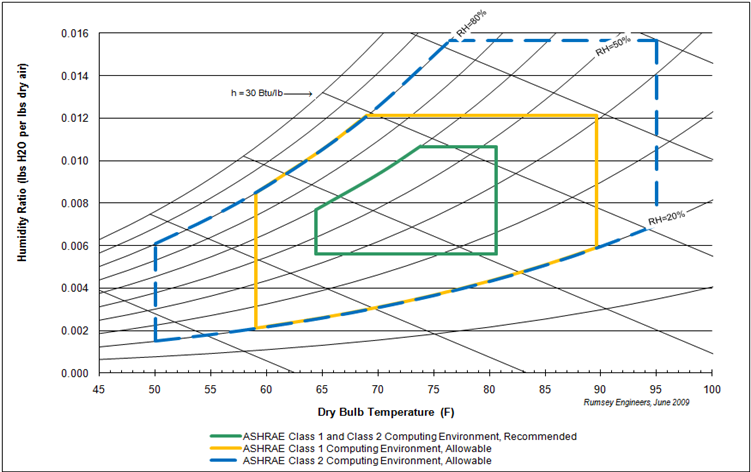

But before diving in again, I am taking the opportunity to reblog an article by James Bridle, published earlier this year, that could serve as an excellent reminder of our initial questions and as a good way to relaunch our research. Interestingly, Bridle focuses on the infrastructural aspects of the cloud (mostly pointing out its “hard” parts), which may in fact become the main focus of our research in this second phase as well. Scaled down, certainly…

Via Icon (thanks Lucien for the reference)

—–

There’s something comforting about the wispy metaphor for the network that underpins most aspects of our daily lives. It’s easy to forget the reality of its vast physical infrastructure