On the way to developing different artifacts for our design research (Inhabiting & Interfacing the Cloud(s)), we’ll need to work with our own “personal cloud”. The first obvious reason is that we need a common platform to exchange research documents and thoughts, and to work together across the different (geographically distributed) partners involved in the project. We are thus our own first case study. The second reason is to exemplify the key components of a small data center / cloud infrastructure as it might be assembled today, and to learn from it.

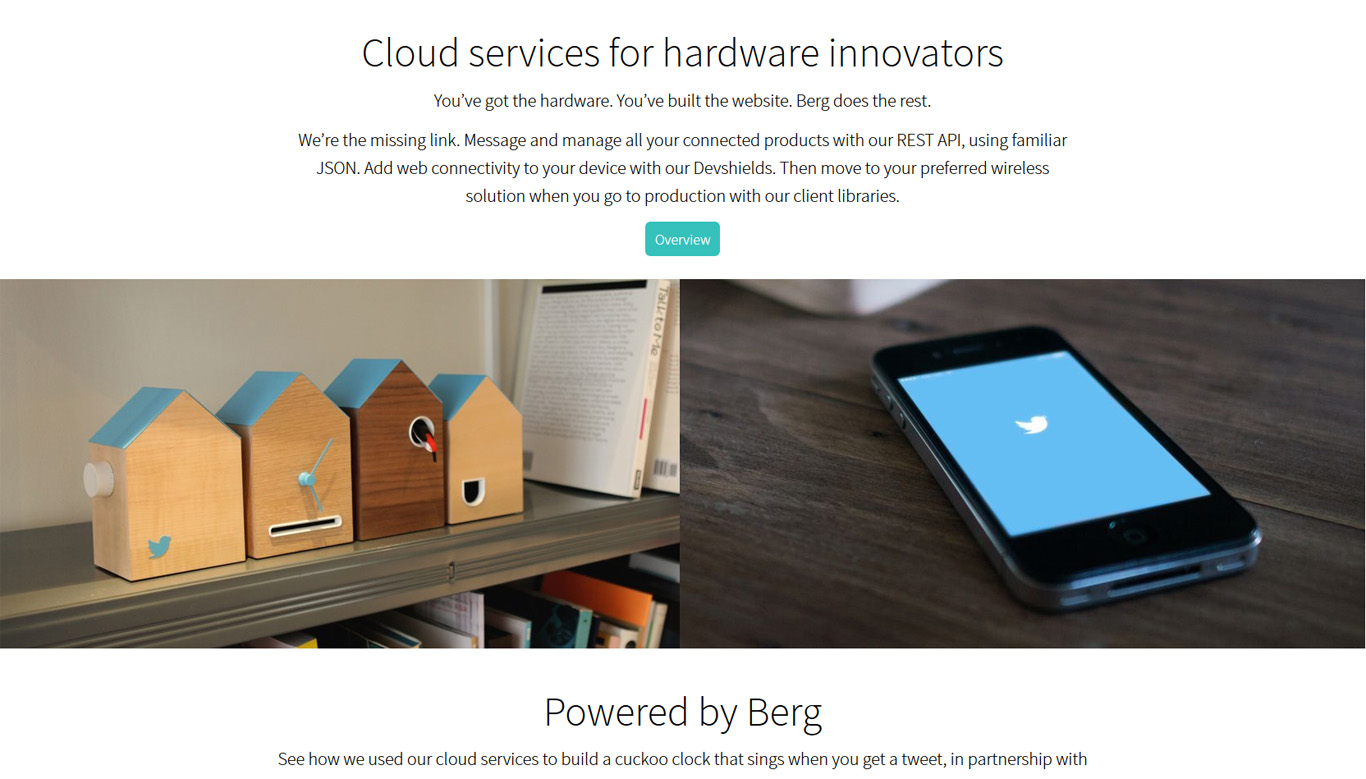

But then, more importantly, this will become necessary for two other main objectives. First, we would like to give the design, architecture and maker communities access to “cloud” tools, so that they can start to play with and transform a dedicated infrastructure (this won’t, of course, be possible with every type of system). Second, the “hands on” and “prototyping” parts of our research will need an accessible cloud-based architecture that we can modify or customize (both software and hardware), and around which open developments, networked objects, new interfaces, apps, etc. could be built.

We are therefore looking for a (personal) cloud technology over which we can keep (personal) control, and this has proved not so easy to find. Cloud computing is usually sold as a service that “users” are just supposed to… use, rather than as a tool to customize or play with.

Of course, we would like to decide what to do with the data we’ll provide and produce, and we’ll also need to keep access to the code so that we can develop the functionalities that will certainly be desired later. Additionally, we would like to provide some sort of API/libraries dedicated to specific users (designers, makers, researchers, scientists, etc.). Finally, it would be nice if the selected system could also provide computing functionalities, as we don’t want to focus only on data storage and sharing.

Our “shopping list” for technology could therefore look like this:

- On the software side, we’ll obviously need an open source “cloud” system that also runs on an open OS, to which we’ll be able to contribute and upon which we’ll develop our own extensions and projects.

- If a “hobbyists/makers/designers/…” community already exists around this technology, this would be a great plus.

- We’ll need a small and scalable system architecture, almost “Torrent”-like, which would allow us to keep things very decentralized.

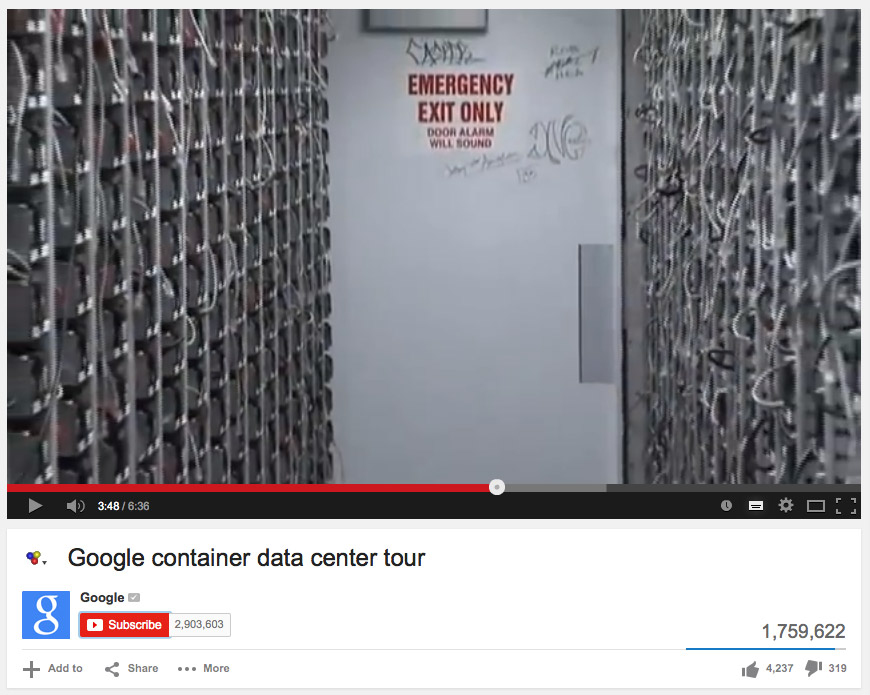

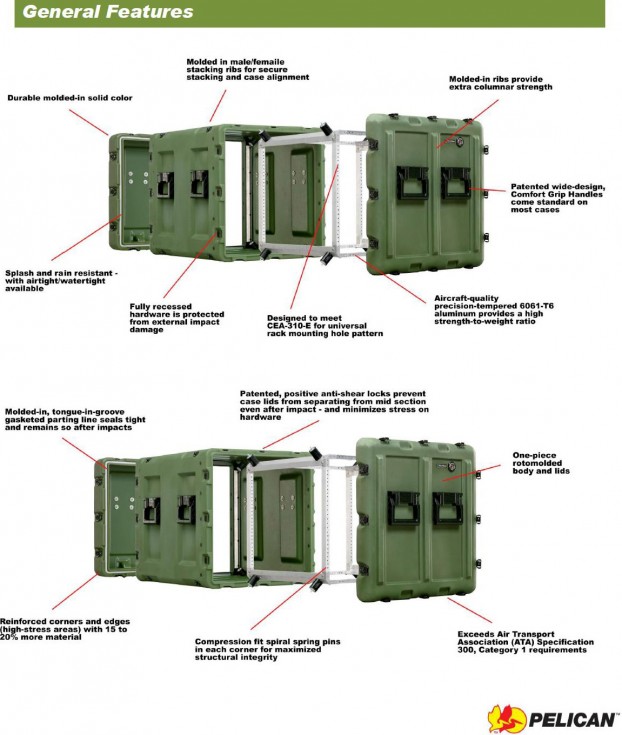

- Even if small, our hardware and infrastructure must be exemplary of what a “data center” is today, so as to help understand it. It happens that the same concepts are present at different scales and that, very simply put, large data centers just look like scaled-up, more complex/technical and improved versions of small ones… Our data center should therefore use existing norms (physical: the “U”, racks and server cabinet units), air (or water) circulation to cool the servers, temperature monitoring and hardware redundancy (RAID disks, internet access, power plugs).

We have been scouting these questions of software and hardware for some time now and, at the time I’m writing this post, our I&IC blog is hosted on our own system in our own little “data center” at the EPFL-ECAL Lab…

We’ve been through different technologies that were interesting, yet, for different reasons, we didn’t really find the ideal system. I can mention here a few of the systems we’ve checked more deeply (Christian Babski will certainly comment on this post in more detail later, to help better understand our choice as he, the scientist, finally made it after several discussions):

We’ve seen some technologies that are based on a Torrent (or similar) architecture, like BitTorrent Sync (which removes the need for data centers), or a crowdfunded one like Space Monkey. Both answer our interest in highly decentralized system architectures and infrastructure, yet they don’t offer development capabilities. For a similar reason, we’re not taking into account open NAS systems like FreeNAS, because they don’t offer processing functions and focus on passive data storage.

We should also mention arkOS once more: it is a very light and interesting alternative hardware/software solution, but it is probably too light for our goals (computing and storage capacities are too low on Raspberry Pis).

We then continued with the evaluation of software that was used by corporations or large cloud solutions and that recently became open, at least for some parts and for some of them. OpenStack seems to be the name that pops up most often. It offers many of the functionalities we were looking for, yet it doesn’t offer the kind of high-level API we are after, is certainly too low level for the design community, and is heavy to manage as well. Same observation for Riak CS (scalability, but only file storage), which is compatible with Amazon S3.

Of course, we shouldn’t forget to mention that even big proprietary solutions (Dropbox, Google Drive, Amazon S3 or EC2, etc., or even Facebook, Twitter and the like, which are typical cloud-based services) offer APIs for developers. But this comes under the same “user agreement” that governs their other “free” services (subject to change, and where the “problem” is stated in the agreement itself: you’re a user and will remain a user). These options are not relevant in our context.

We’ve finally almost found what we were looking for with ownCloud, an open source cloud software that runs on a Linux OS, is not too low level, and comes with a community of developers and hobbyists, APIs, the standard cloud functionalities already well developed (desktop, iOS and Android clients) and some apps. They recently added server scalability that opens toward a highly decentralized and scalable system architecture, which makes it compatible with our preliminary intentions.
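To give an idea of what “APIs” can mean in practice here: ownCloud exposes files over WebDAV, so a few lines of script are enough to prepare an upload. The sketch below is only an illustration under assumptions. The server address, user name, password and file path are hypothetical, the `remote.php/webdav/` path is the WebDAV endpoint as we understand it from the ownCloud documentation (it may differ between versions), and the helper `build_upload_request` is our own name, not part of ownCloud. The request is built but deliberately not sent.

```python
import base64
import urllib.request
from urllib.parse import quote


def build_upload_request(base_url, user, password, remote_path, data):
    """Prepare (but do not send) a WebDAV PUT request for an ownCloud server.

    Assumption: the server exposes WebDAV under <base_url>/remote.php/webdav/.
    """
    # Percent-encode the remote path, keeping "/" as the folder separator.
    path = quote(remote_path.lstrip("/"))
    url = f"{base_url.rstrip('/')}/remote.php/webdav/{path}"

    # HTTP Basic authentication header built from the (hypothetical) credentials.
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")

    request = urllib.request.Request(url, data=data, method="PUT")
    request.add_header("Authorization", f"Basic {token}")
    return request


# Hypothetical server, account and file, for illustration only.
req = build_upload_request(
    "https://cloud.example.org", "alice", "secret", "research/notes.txt", b"hello"
)
print(req.get_method(), req.full_url)

# To actually upload, one would call: urllib.request.urlopen(req)
```

Because this is plain HTTP, the same request could be issued from a networked object or a small app as easily as from a script, which is the kind of openness we are after.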

The only minus points would be that, unlike “torrent-like” architectures, it maintains a centralized management of data and doesn’t offer distributed computing. This means that once you’ve set up your own personal cloud, you become the person who manages the “agreements” with the “users”. This latter point could possibly be addressed differently though, now that scalability and decentralization have been added. Regarding computing, as the system is installed on a server with an OS, we keep server-side computing capacities if necessary.

This system will become the technical base of some of our future developments.
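For readers who prefer a compact view, the comparison made in this post can be written down as a small data structure. This is only one possible reading: the labels are our own paraphrase of the shortcomings mentioned above, not an official feature matrix, and the names `SHORTCOMINGS`, `ACCEPTABLE` and `shortlist` are purely illustrative.

```python
# Shortcomings as stated in this post; the wording of the labels is ours.
SHORTCOMINGS = {
    "BitTorrent Sync": {"no development capabilities"},
    "Space Monkey": {"no development capabilities"},
    "FreeNAS": {"no processing functions"},
    "arkOS": {"too little computing and storage capacity"},
    "OpenStack": {"too low level", "heavy to manage"},
    "Riak CS": {"file storage only"},
    "ownCloud": {"centralized data management", "no distributed computing"},
}

# The "minus points" we decided we could live with.
ACCEPTABLE = {"centralized data management", "no distributed computing"}


def shortlist(shortcomings, acceptable):
    """Return the systems whose stated shortcomings are all acceptable."""
    return sorted(
        name for name, issues in shortcomings.items() if issues <= acceptable
    )


print(shortlist(SHORTCOMINGS, ACCEPTABLE))
```

Changing the content of `ACCEPTABLE` is a quick way to see how a different set of priorities would shift the choice.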

-

Please see also the following related posts:

Setting up our own (small size) personal cloud infrastructure. Part #2, components

Setting up our own (small size) personal cloud infrastructure. Part #3, components