Note: in two weeks (02-06.02) we will start a new I&IC workshop led by the architects of the ALICE laboratory (EPFL), under the direction of Prof. Dieter Dietz, with doctoral assistant Thomas Favre-Bulle, architect scientist-lecturer Caroline Dionne and architect studio director Rudi Nieveen. During this workshop we will mainly investigate the territorial dimension(s) of the cloud, as well as distributed "domestic" scenarios that develop a symbiosis between small, decentralized personal data centers and the act of inhabiting. We will also look toward a possible urban dimension for these data centers. The workshop is open to master and bachelor students of architecture (EPFL) on a voluntary basis (it is not part of the curriculum).

A second workshop will also be organized by ALICE during the same week on a related topic (see the downloadable pdf below). Both workshops will take place at the EPFL-ECAL Lab.

Below is the brief that ALICE has distributed to the students.

Inhabiting the Cloud(s)

Wondering about interaction design, architecture and the virtual? Wish to improve your reactivity and design skills?

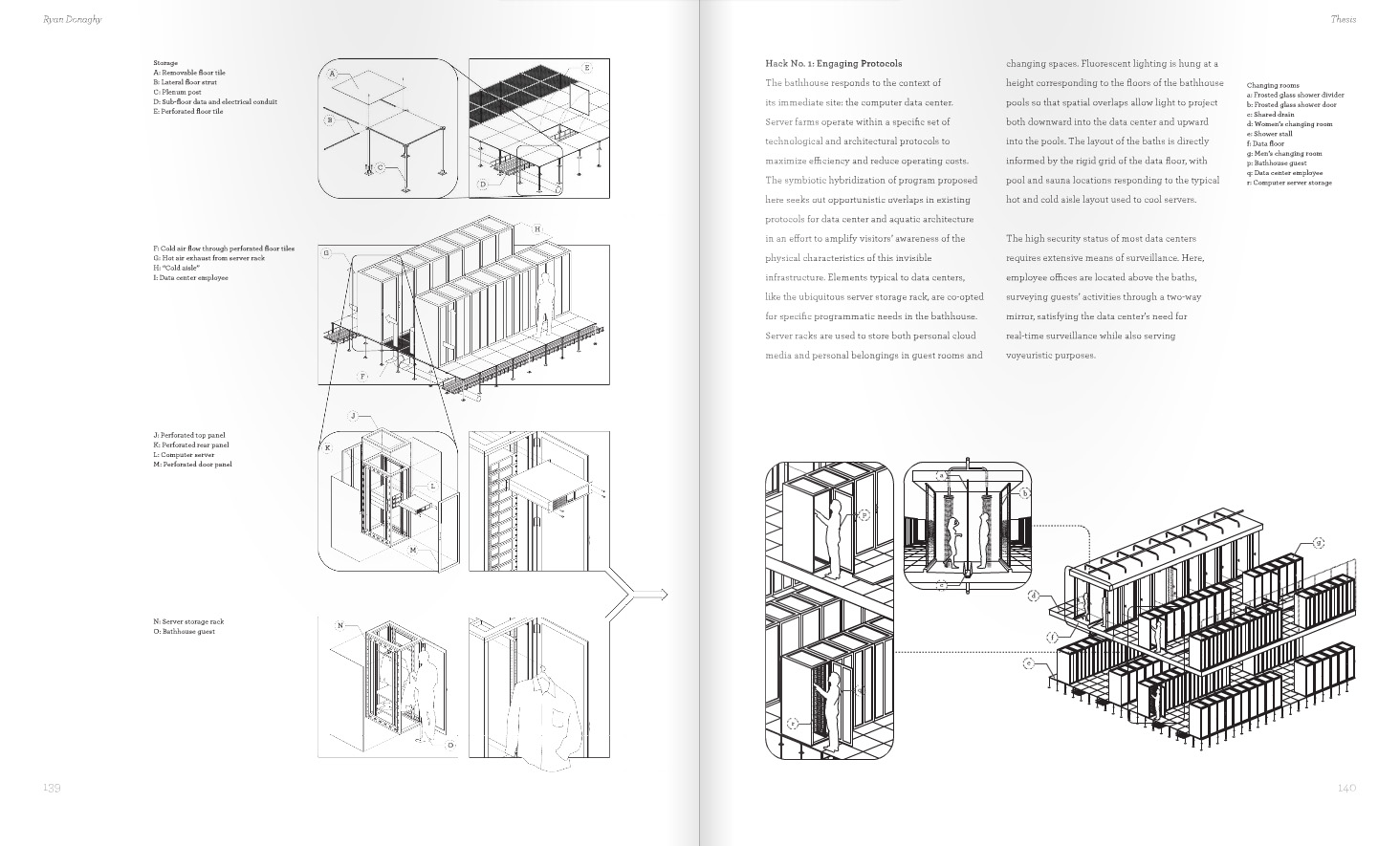

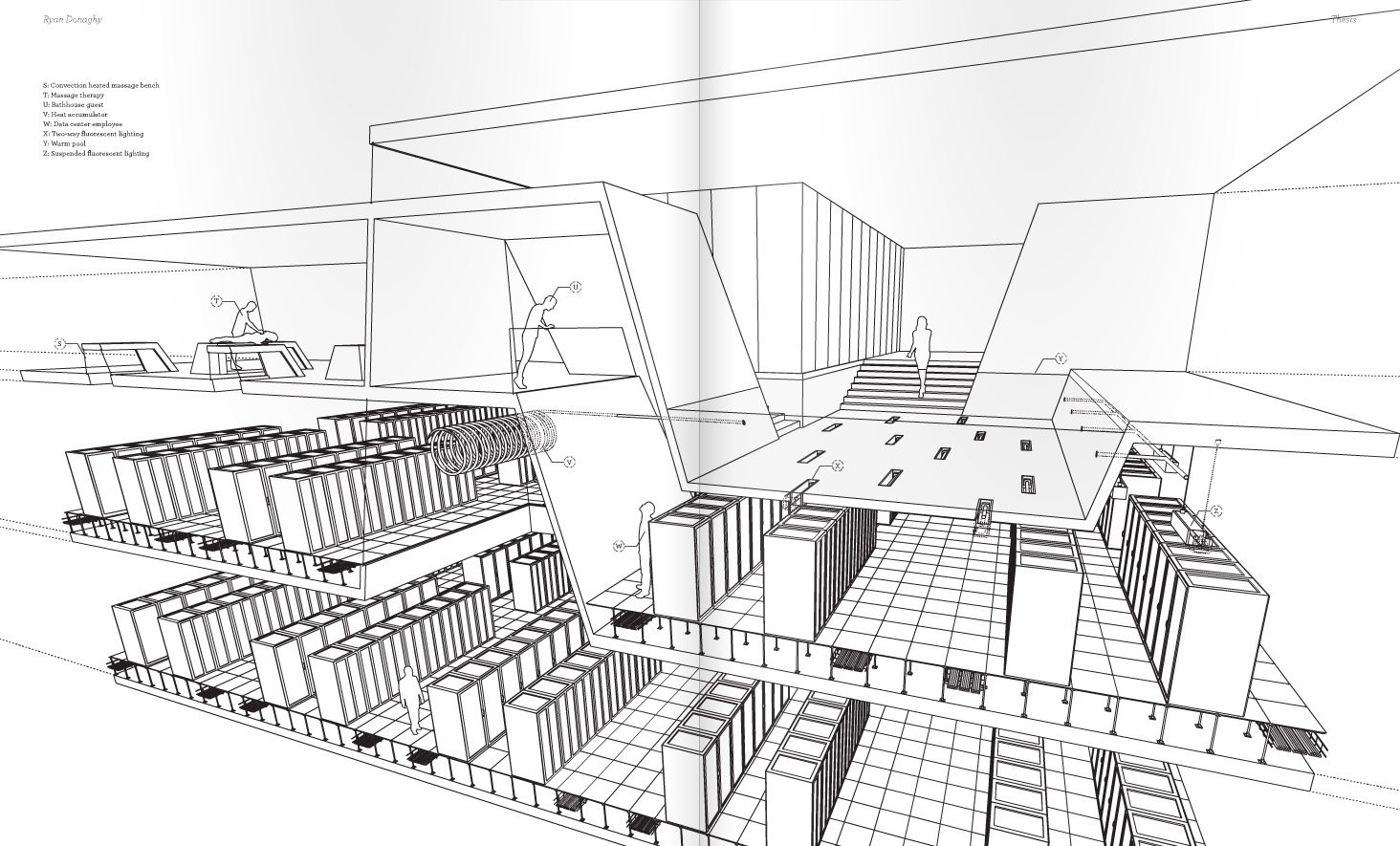

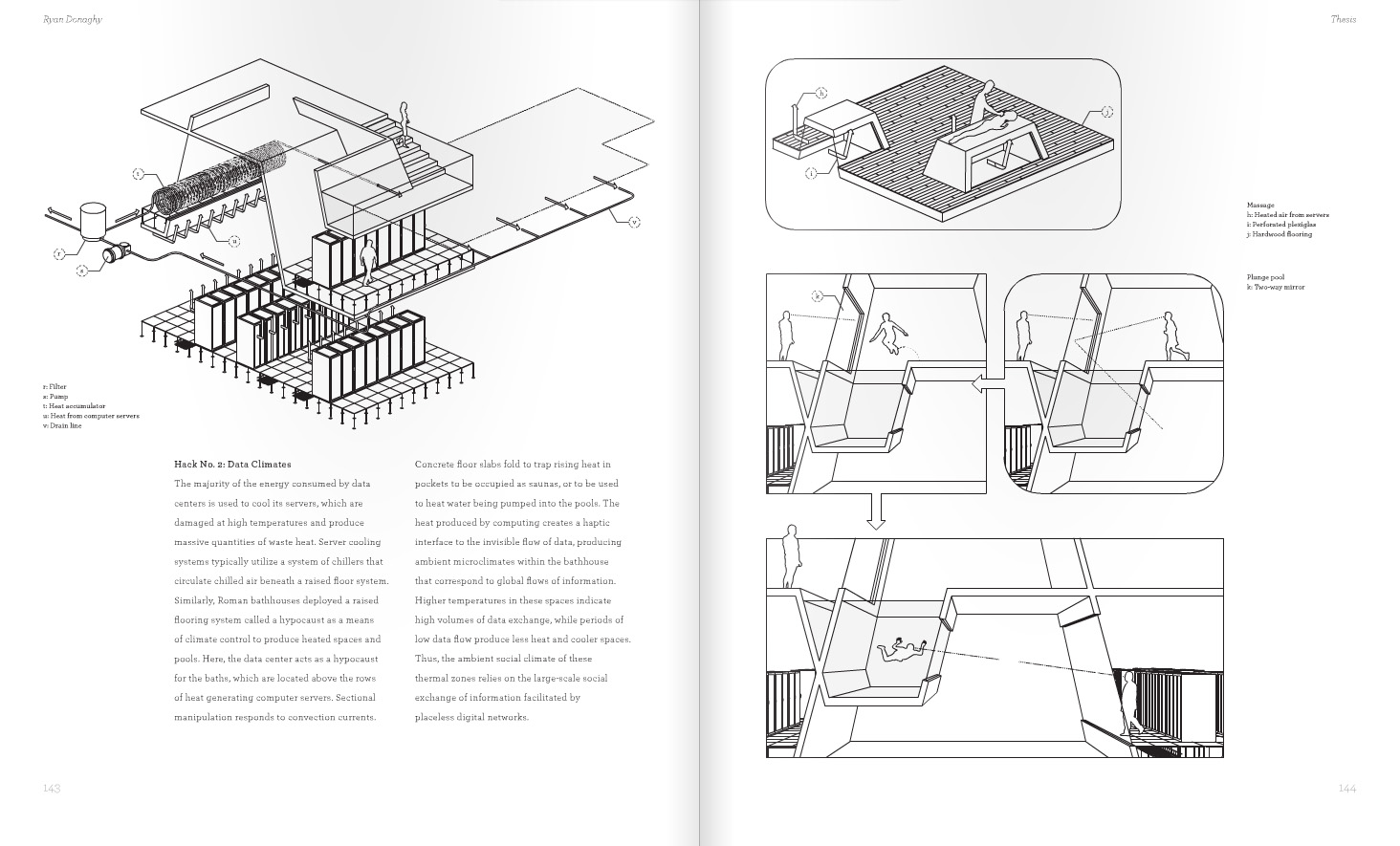

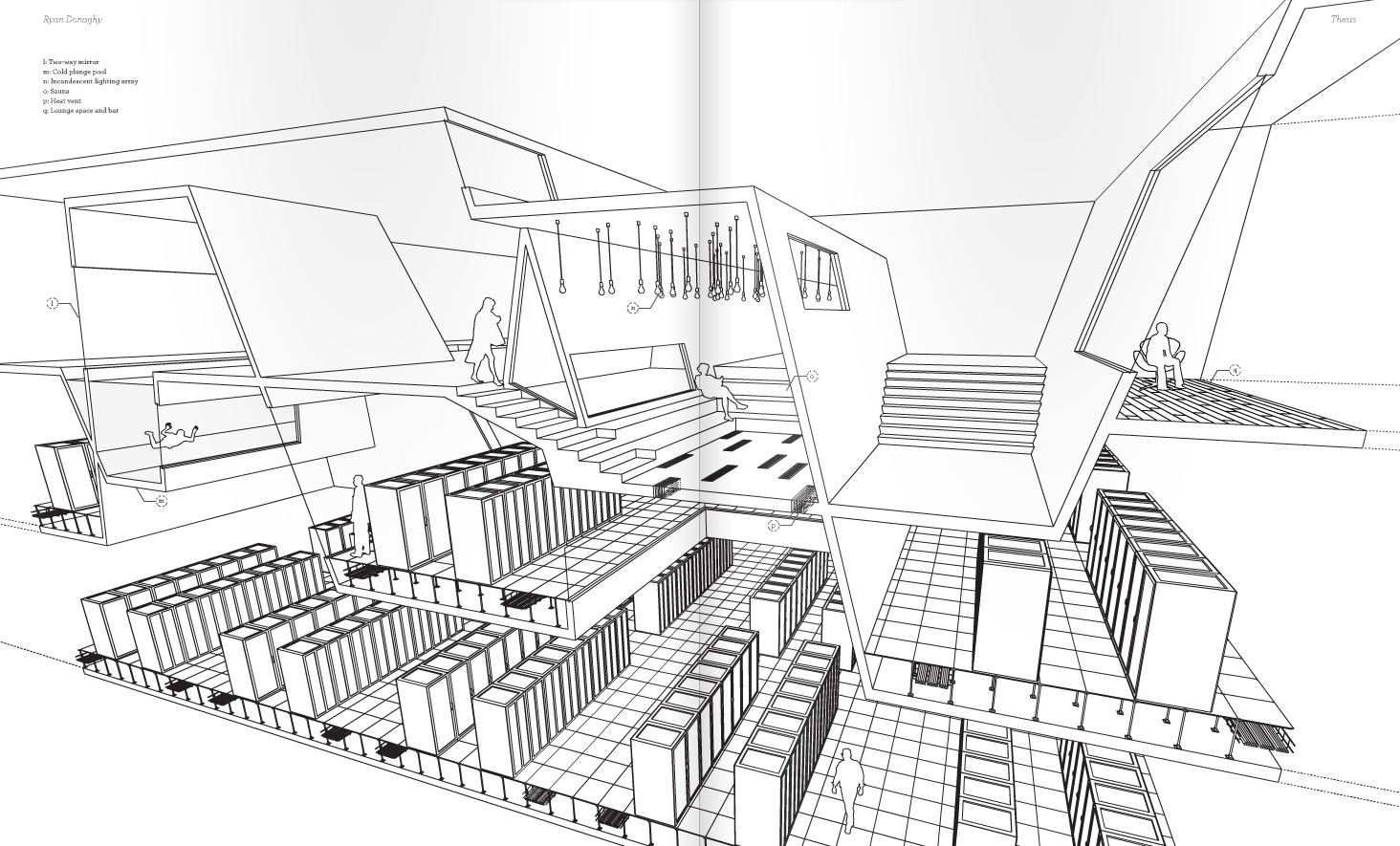

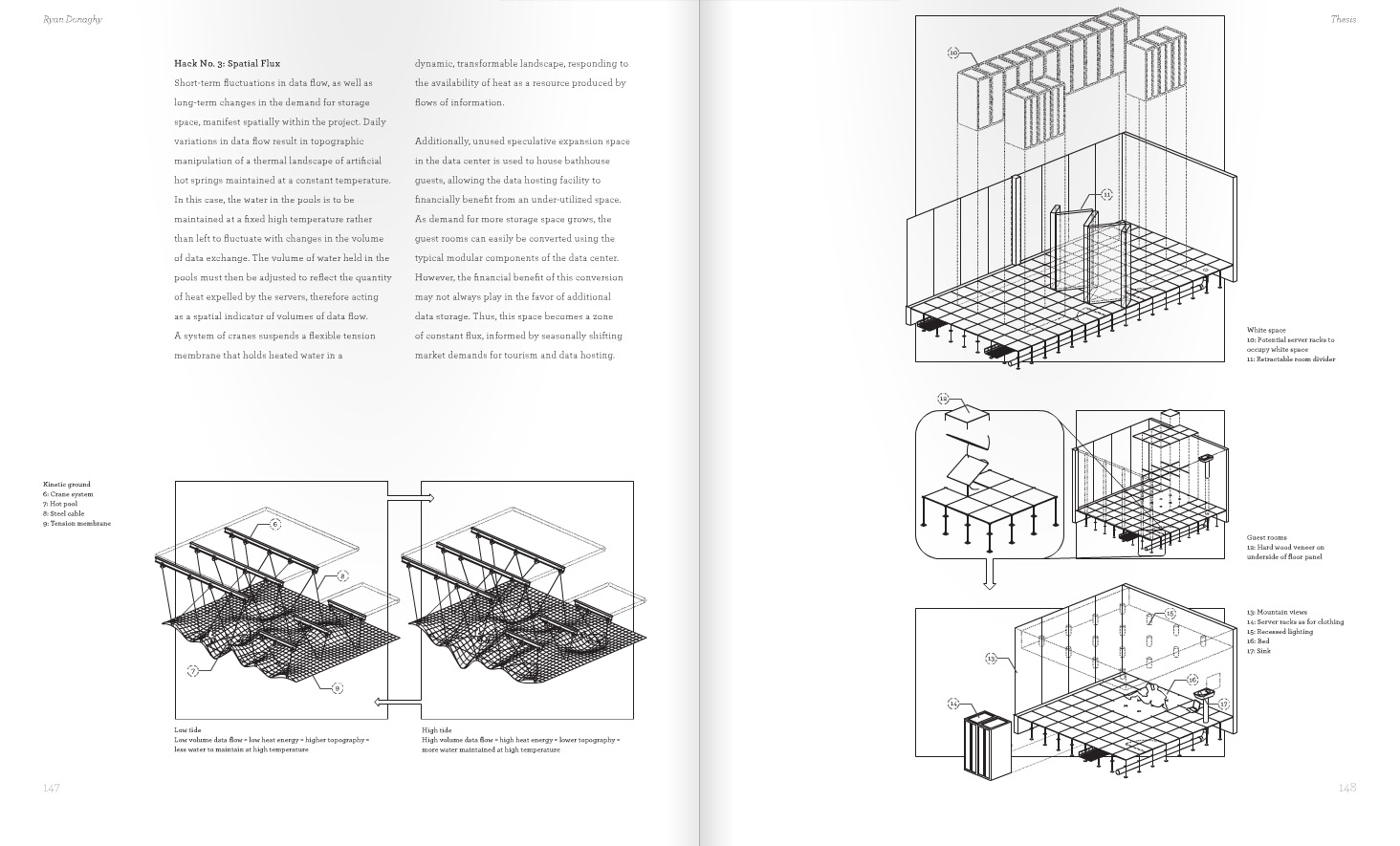

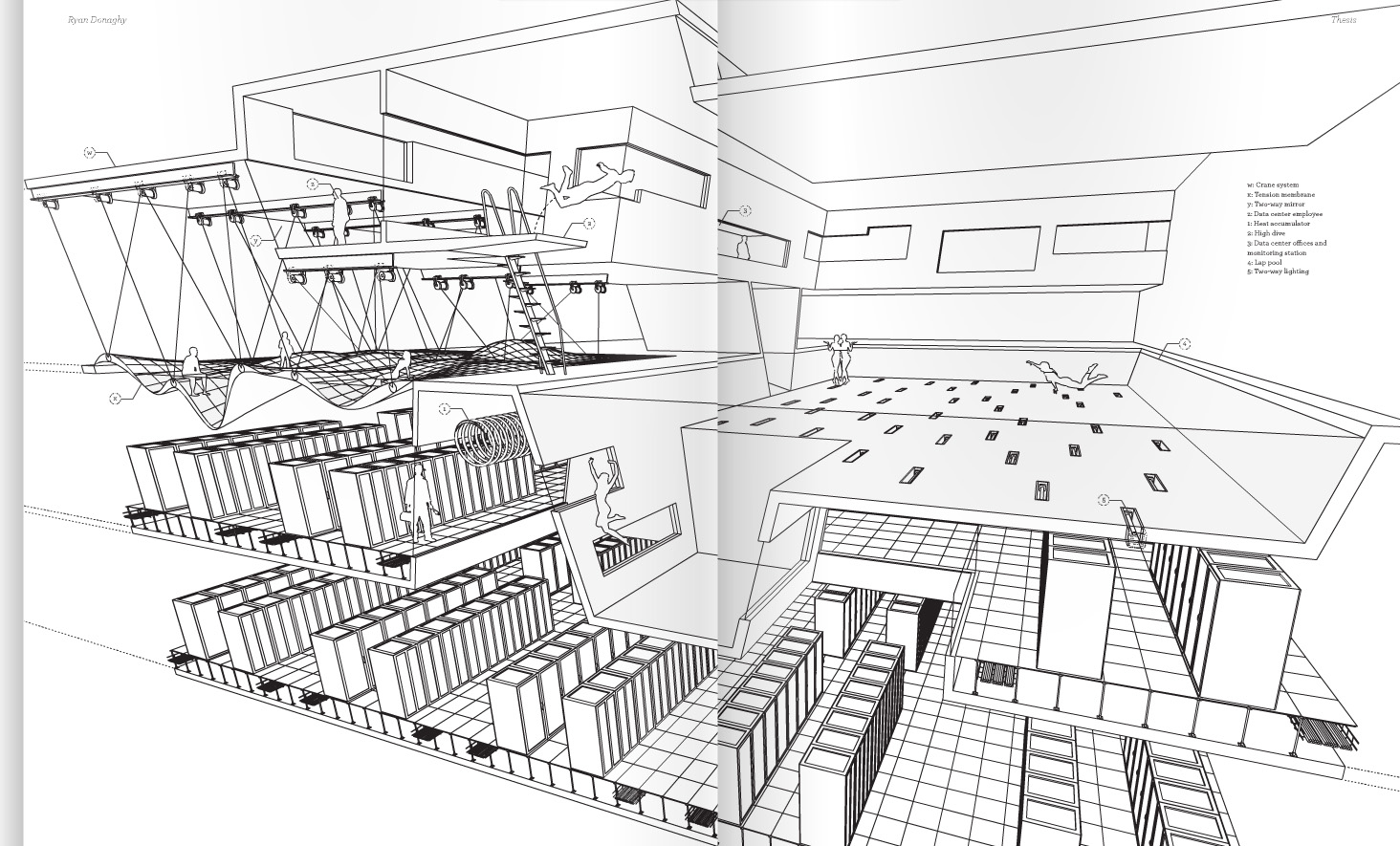

Cloud interfaces are now part of our daily experience: we use them as storage space for our music, our work, our contacts, and so on. Clouds are intangible, virtual “spaces” and yet, their efficacy relies on humongous data-centres located in remote areas and subjected to strict spatial configurations, climate conditions and access control.

Inhabiting the Cloud(s) is a five-day exploratory workshop on the theme of cloud interfacing, data-centres and their architectural, urban and territorial manifestations.

Working from the scale of the “shelter” and the (digital) “cabinet”, projects will address issues of inhabited social space, virtualization and urban practices. Cloud(s) and their potential materialization(s) will be explored through “on the spot” models, drawings and 3D printing. The aim is to produce a series of prototypes and user-centered scenarios.

Participation is free and open to all SAR students.

ATTENTION: Places are limited to 10, register now!

Info and registration: caroline.dionne@epfl.ch & thomas.favre-bulle@epfl.ch

www.iiclouds.org

-

Download the two briefs (Inhabiting the Cloud(s) & Montreux Jazz Pavilion)

Laboratory profile

The key hypothesis of ALICE’s research and teaching activities places space at the focus of human and technological processes. Can the complex ties between human societies, technology and the environment become tangible once translated into spatial parameters? How can these be reflected in a synthetic design process? ALICE strives for collective, open processes and non-deterministic design methodologies, driven by the will to integrate analytical, data-based approaches and design thinking into actual project proposals and holistic scenarios.