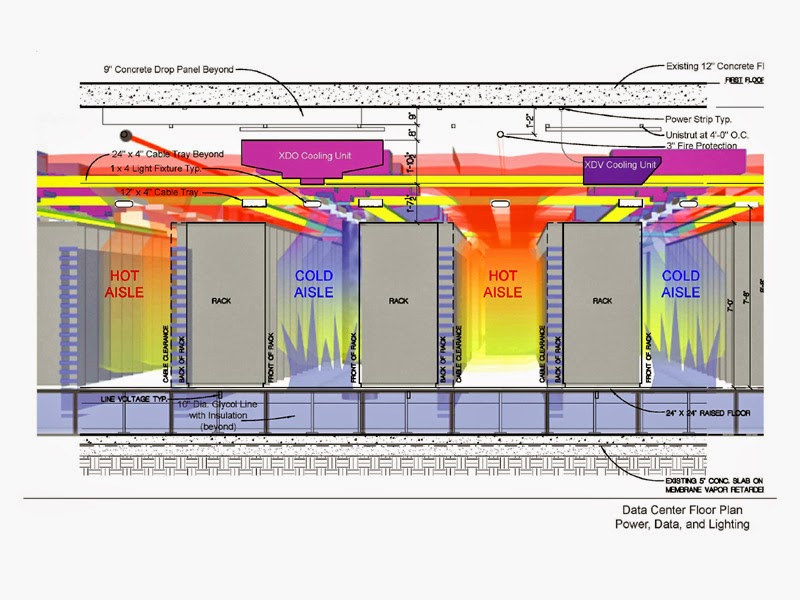

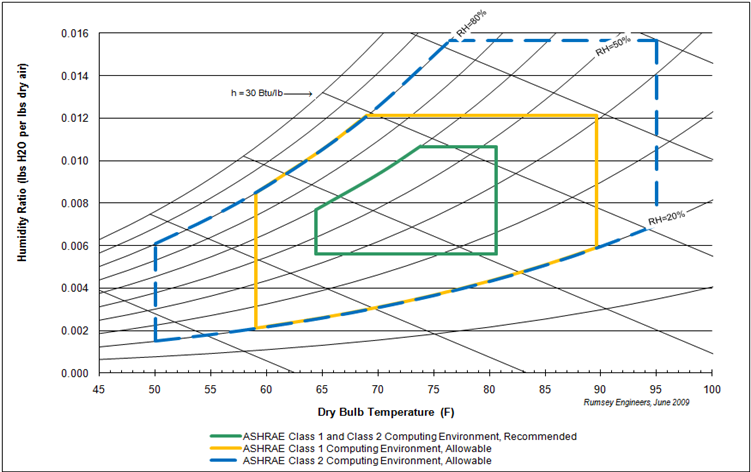

Both images are taken from the website Green Data Center Design and Management, “Data Center Design Consideration: Cooling” (03.2015). Source: http://macc.umich.edu.

ASHRAE is a “global society advancing human well-being through sustainable technology for the built environment”.

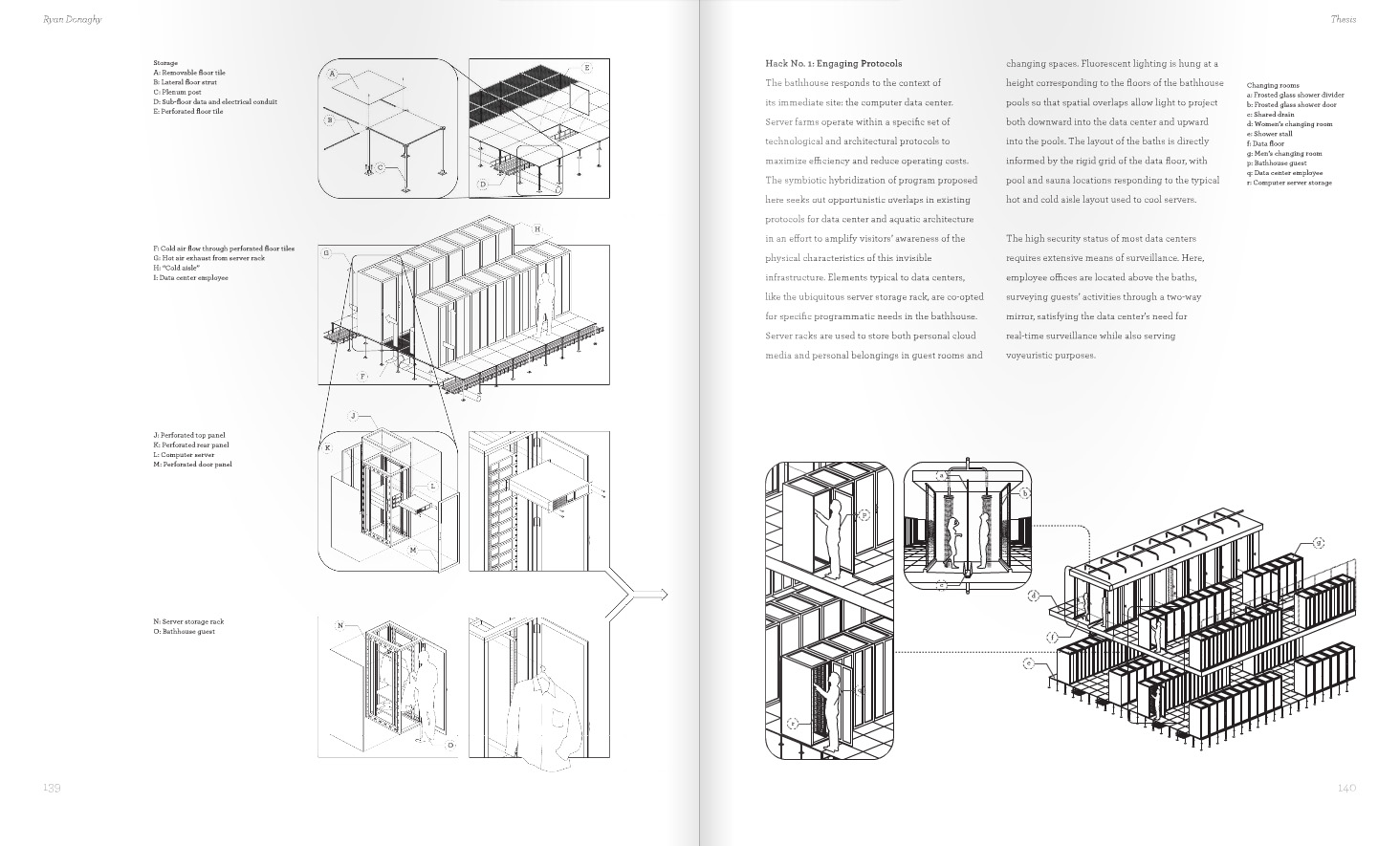

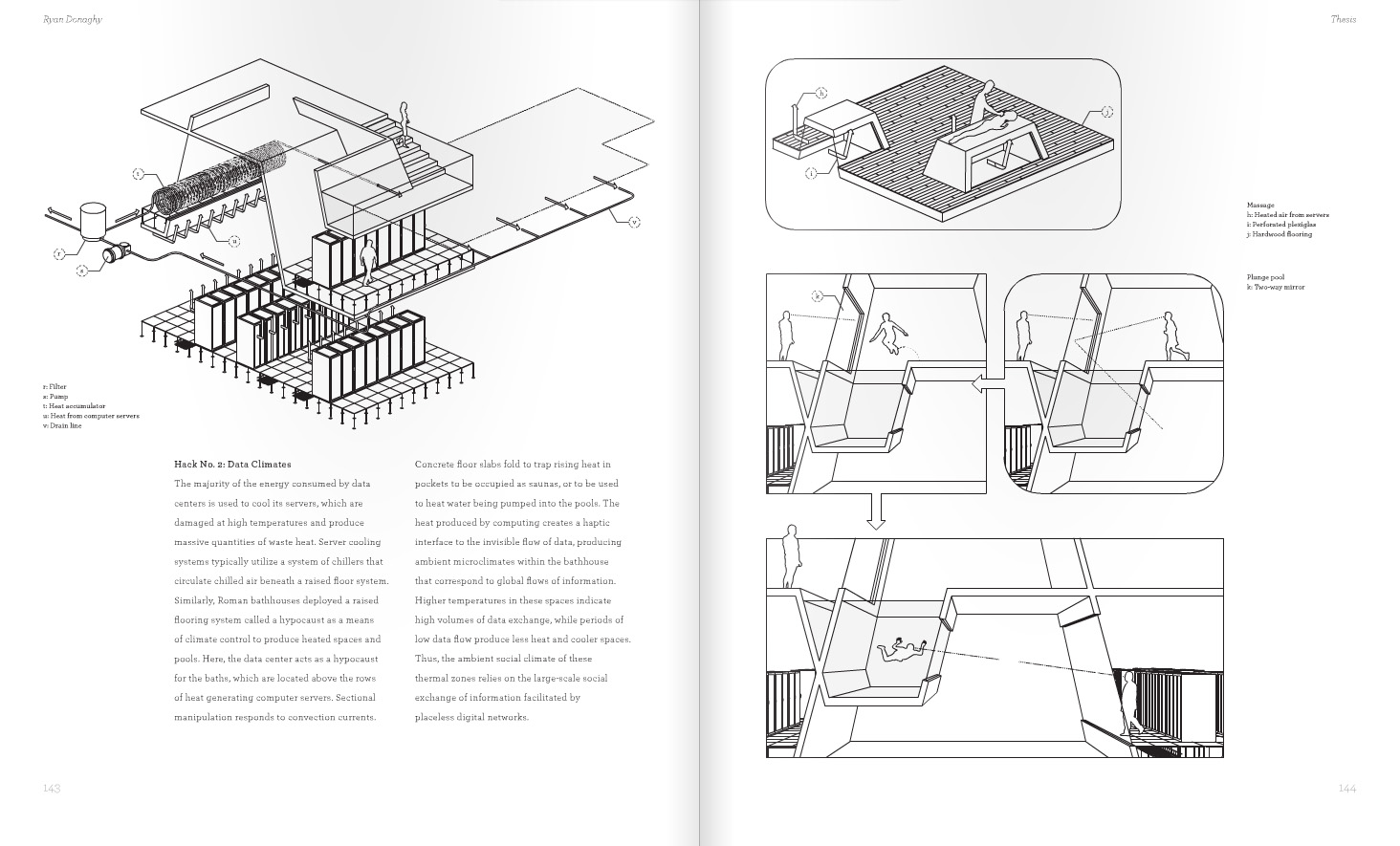

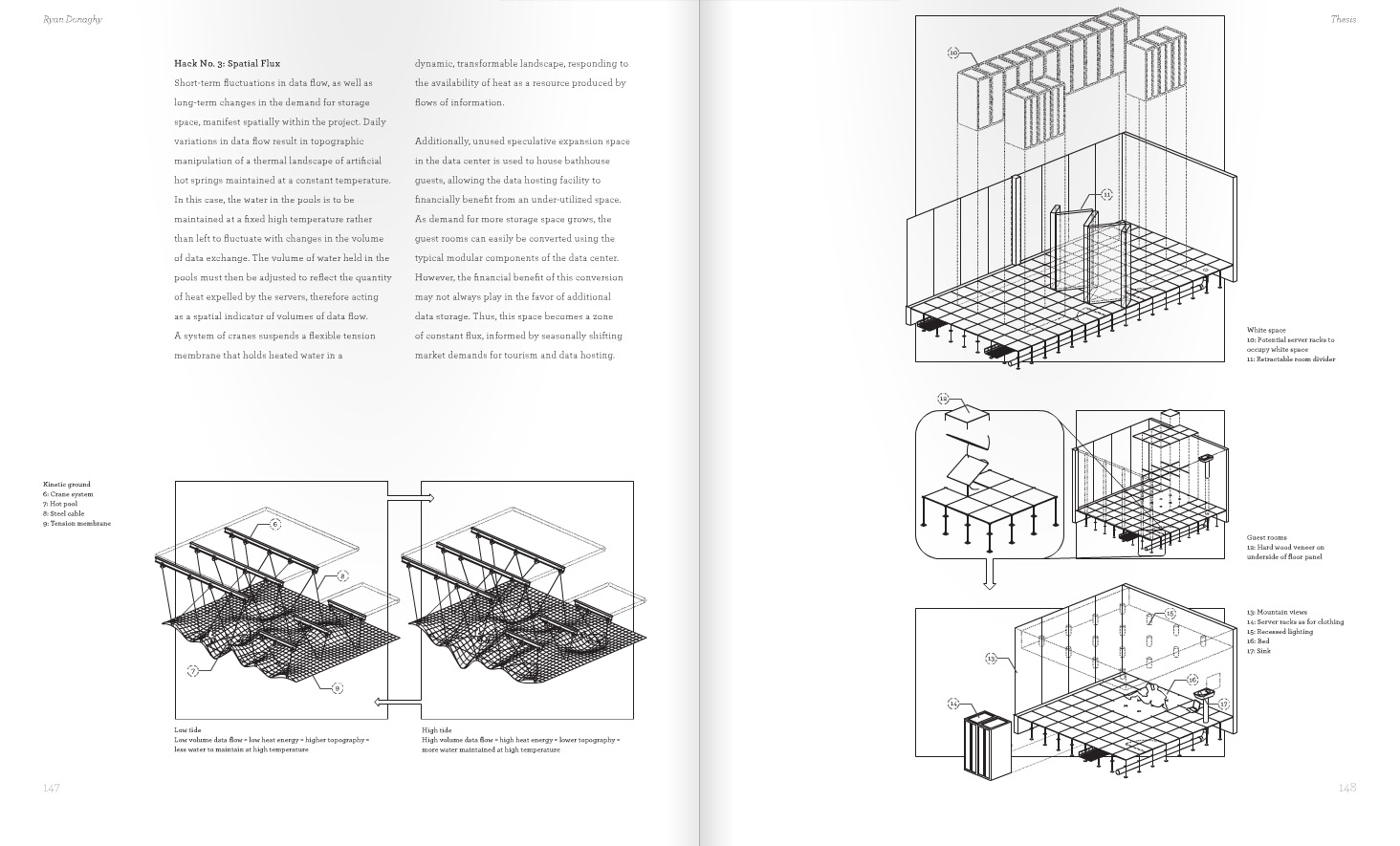

A typical question that arises with data centers is how to cool the servers they contain. The more they compute, the more energy they consume and the more heat they produce, so the more they need to be cooled to stay in operation (a wide operating range would be 10°C to 30°C). The optimal server room temperature seems to be around 20-21°C, or ~27°C for recent, professional machines (Google recommends 26.7°C).

The exact operating temperature is subject to discussion and depends on the hardware.
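To make these ranges concrete, here is a minimal sketch in Python that sorts a measured server inlet temperature into rough bands. The thresholds are simply the figures quoted above (10°C to 30°C as the wide operating range, ~27°C as the target for recent machines); the function name and the band labels are hypothetical, and real limits depend on the hardware.

```python
# Thresholds in degrees Celsius, taken from the figures quoted in the text.
# They are illustrative assumptions, not a vendor specification.
OPERATING_MIN_C = 10.0
OPERATING_MAX_C = 30.0
RECENT_HARDWARE_TARGET_C = 27.0


def classify_inlet_temperature(temp_c: float) -> str:
    """Return a rough label for a server inlet temperature (hypothetical helper)."""
    if temp_c < OPERATING_MIN_C:
        return "too cold"
    if temp_c > OPERATING_MAX_C:
        return "too hot"
    if temp_c <= RECENT_HARDWARE_TARGET_C:
        return "within target"
    # Between the target and the upper operating limit.
    return "operable, above target"


if __name__ == "__main__":
    for reading in (5.0, 21.0, 26.7, 29.0, 35.0):
        print(f"{reading:5.1f} °C -> {classify_inlet_temperature(reading)}")
```

Keeping the thresholds as named constants makes it easy to swap in whatever values a given hardware vendor actually specifies.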

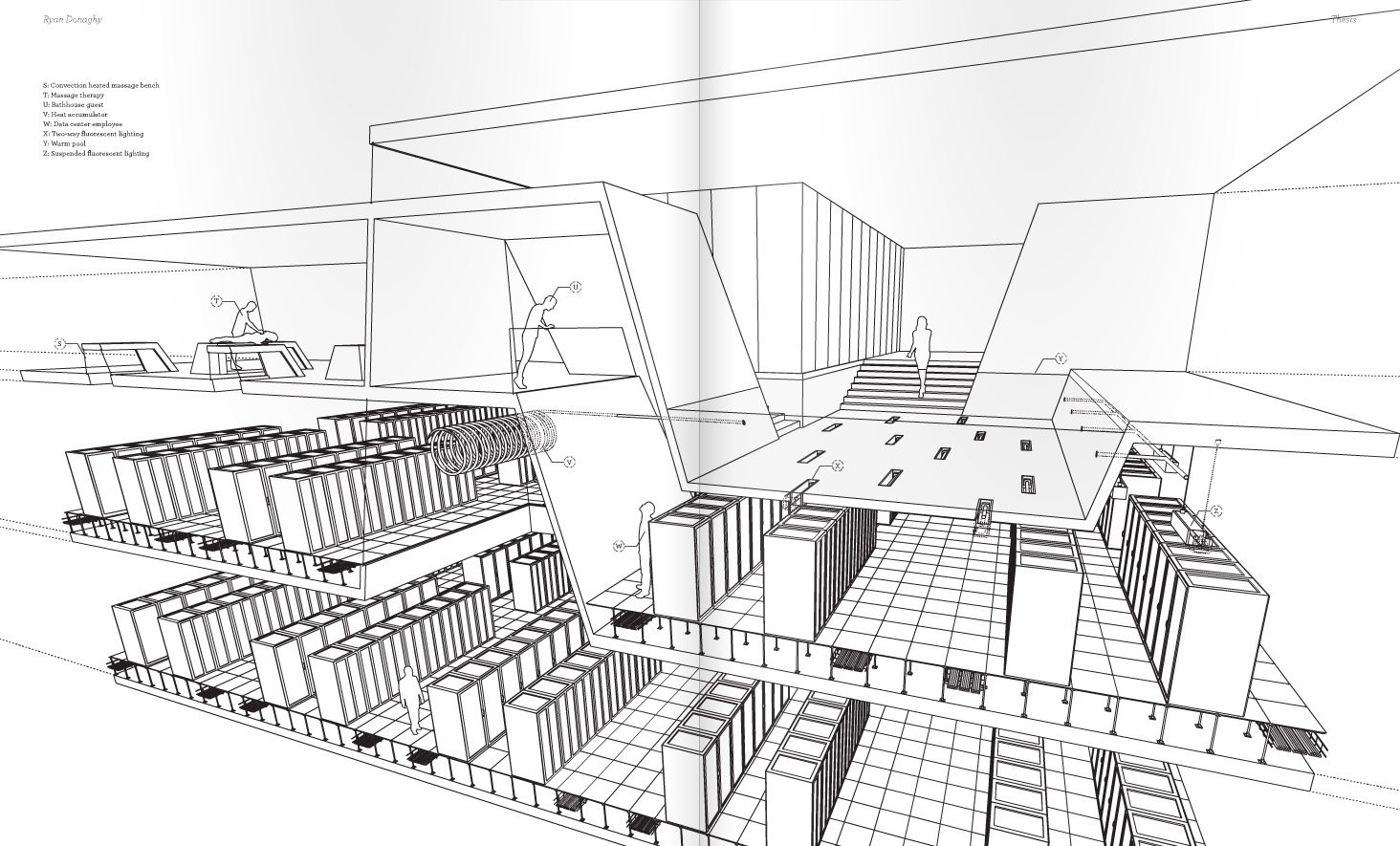

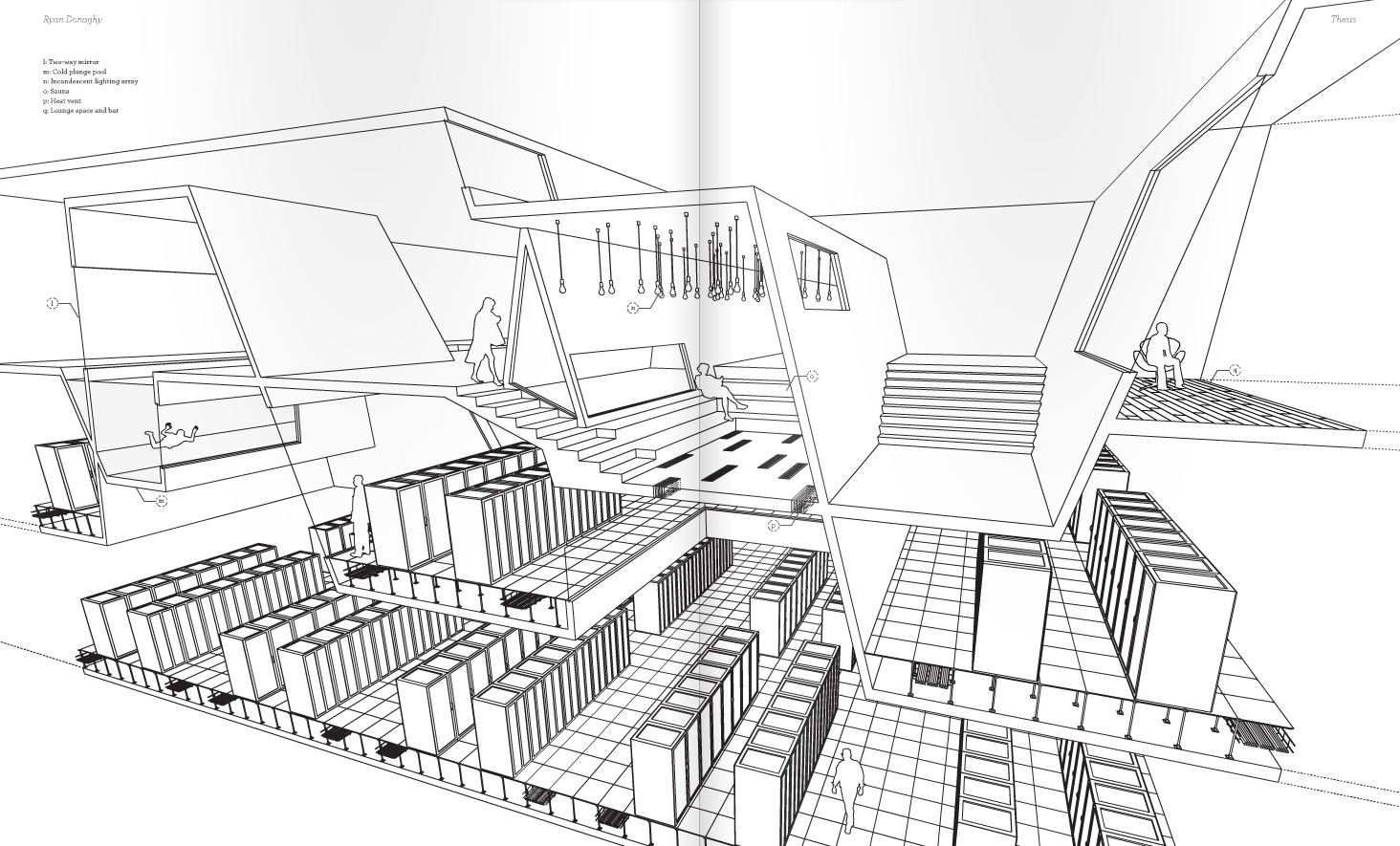

Yet every data center raises the question of air conditioning and air flow. In this case, it always revolves around the arrangement in the upper drawing, or variations of it: (1) cold air aisles, floors or areas need to be created or maintained, from which the servers draw their cooling air, and (2) hot air aisles, ceilings or areas need to be managed, where the heated air is released and extracted.

The second drawing shows that humidity matters as well, and that its acceptable range depends on temperature.
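One common way to relate temperature and humidity is the dew point, the temperature at which air of a given relative humidity starts to condense. The sketch below uses the Magnus approximation with one widely used set of coefficients; it is a generic illustration, not a reproduction of the drawing, and the function name is hypothetical.

```python
import math

# Magnus approximation coefficients (one commonly used set, valid over
# liquid water for ordinary room temperatures). Assumed, not from the source.
MAGNUS_A = 17.62
MAGNUS_B = 243.12  # degrees Celsius


def dew_point_c(temp_c: float, relative_humidity_percent: float) -> float:
    """Approximate dew point in °C from air temperature and relative humidity."""
    if not 0.0 < relative_humidity_percent <= 100.0:
        raise ValueError("relative humidity must be in (0, 100]")
    gamma = (
        math.log(relative_humidity_percent / 100.0)
        + MAGNUS_A * temp_c / (MAGNUS_B + temp_c)
    )
    return MAGNUS_B * gamma / (MAGNUS_A - gamma)


if __name__ == "__main__":
    for temp, rh in ((20.0, 50.0), (27.0, 50.0), (27.0, 80.0)):
        print(f"{temp:.1f} °C at {rh:.0f}% RH -> dew point {dew_point_c(temp, rh):.1f} °C")
```

Any surface colder than the dew point will collect condensation, which is one reason why warmer and more humid supply air needs care around cold equipment.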

As hot air, expanded and less dense, naturally rises while cold air sinks, many interesting and possibly natural air streams could be imagined around this configuration …
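The buoyancy effect can be estimated with the ideal gas law: at constant pressure, air density falls as temperature rises. The following is a rough sketch assuming dry air at standard sea-level pressure; the example temperatures (20°C intake, 35°C exhaust) are illustrative assumptions, not measurements.

```python
# Ideal gas estimate of dry-air density: rho = p / (R_specific * T).
# Assumes dry air at standard sea-level pressure; humidity is ignored.
STANDARD_PRESSURE_PA = 101_325.0
R_SPECIFIC_DRY_AIR = 287.05  # J/(kg*K)


def air_density_kg_m3(temp_c: float, pressure_pa: float = STANDARD_PRESSURE_PA) -> float:
    """Return the approximate density of dry air in kg/m^3."""
    temp_k = temp_c + 273.15
    return pressure_pa / (R_SPECIFIC_DRY_AIR * temp_k)


if __name__ == "__main__":
    cold = air_density_kg_m3(20.0)  # assumed cold-aisle temperature
    hot = air_density_kg_m3(35.0)   # assumed hot-aisle temperature
    print(f"cold aisle: {cold:.3f} kg/m^3")
    print(f"hot aisle:  {hot:.3f} kg/m^3")
    print(f"hot air is {100.0 * (cold - hot) / cold:.1f}% less dense")
```

A density difference of a few percent is small, but it is the driving force behind the natural convection currents imagined here.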